Introduction

I have created a highly available web hosting cluster that is run entirely by Ansible. Servers are created and deleted as needed, with scalability provided by running on Digital Ocean’s cloud. Common problems are detected and fixed without user intervention. This infrastructure is specialized and complex, so this post explains how it fits together; understanding it will be necessary for future posts on this system.

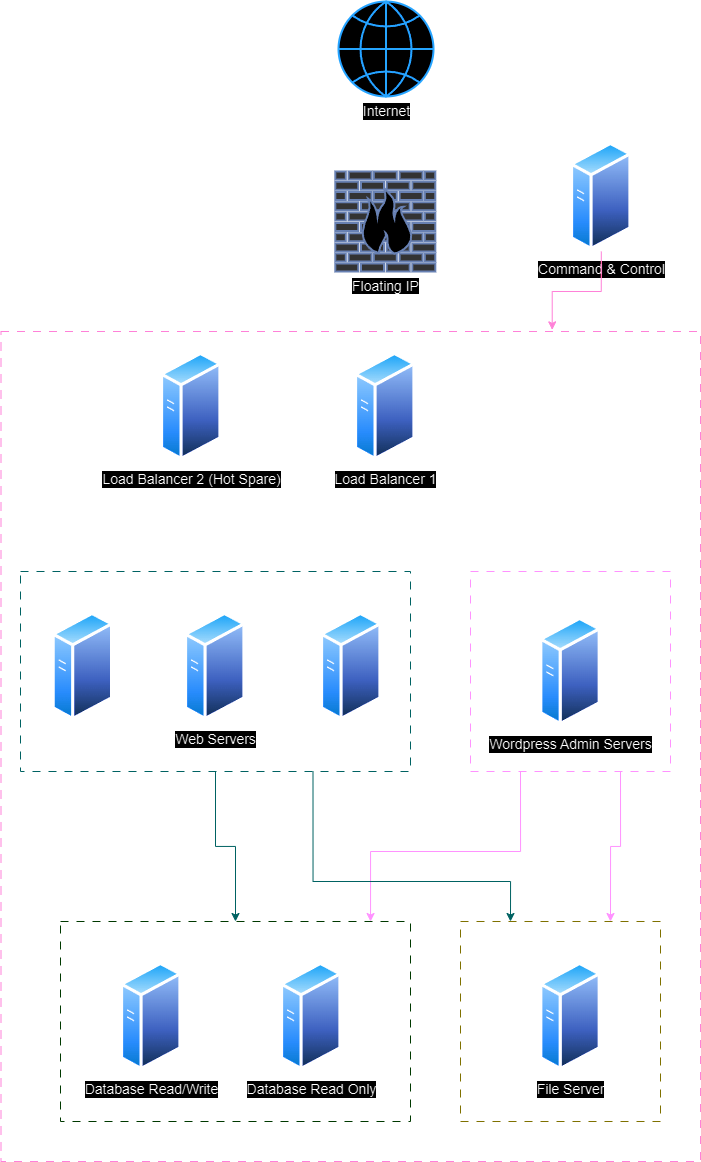

The Complete Picture

This looks like a lot, but we will break it down below. Some things to note about the system as a whole:

- All virtual servers run Ubuntu Server 24.04.

- The only servers with public internet access are the load balancers and the command & control server. All other traffic stays inside a private LAN between the servers.

- The servers are divided into groups based on their function. Because each role’s computing resources are scaled independently, capacity is used efficiently.

- The following groups can scale horizontally by adding more servers: Web Servers, WordPress Admin servers, and Database servers.

- All of the servers can scale vertically via Digital Ocean’s control panel or API.

- Almost all of the websites we host are mostly static business websites that do not need live updates.

Network Layout

As mentioned above, only the load balancers and the Command & Control server can be accessed over the internet. Digital Ocean lets you “tag” servers with information about them. Each server in this cluster is tagged with its role, with either `backend` or `frontend`, and with `web_cluster`. These tags do 3 big things:

- They assign firewalls to the droplets based on their role tags. This happens at the provider level, so a server is protected from the moment it is created, before it even boots.

- The `backend` tag means the server should not be accessible from the internet, while `frontend` means it should be.

- The `web_cluster` tag is used to create the private LAN between all of the droplets in the cluster. This keeps other resources in the same Digital Ocean account from accessing the cluster.
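
To make the tag-based firewalls concrete, here is a minimal Python sketch that builds two request bodies for DigitalOcean’s v2 Firewalls API (`POST /v2/firewalls`). The top-level `tags` field attaches a firewall to every droplet carrying that tag, and a rule whose `sources` lists the `web_cluster` tag only admits traffic from cluster members. The firewall names and port choices are hypothetical; the post does not list the actual rules.

```python
import json

# Hypothetical rules: the post does not publish its real firewall configuration.
# TCP only, for brevity; a real setup would also need UDP (e.g. DNS) rules.
CLUSTER_TAG = "web_cluster"
EVERYWHERE = ["0.0.0.0/0", "::/0"]
ALL_TCP = "1-65535"


def inbound(ports: str, **sources) -> dict:
    """One inbound TCP rule; `sources` is e.g. addresses=[...] or tags=[...]."""
    return {"protocol": "tcp", "ports": ports, "sources": sources}


def outbound(ports: str, **destinations) -> dict:
    """One outbound TCP rule."""
    return {"protocol": "tcp", "ports": ports, "destinations": destinations}


def frontend_firewall() -> dict:
    """Applied to every droplet tagged `frontend`: HTTP(S) open to the internet."""
    return {
        "name": "frontend-fw",
        "tags": ["frontend"],
        "inbound_rules": [
            inbound("80", addresses=EVERYWHERE),
            inbound("443", addresses=EVERYWHERE),
            inbound(ALL_TCP, tags=[CLUSTER_TAG]),  # everything else only from the cluster
        ],
        "outbound_rules": [outbound(ALL_TCP, addresses=EVERYWHERE)],
    }


def backend_firewall() -> dict:
    """Applied to every droplet tagged `backend`: reachable only from the cluster."""
    return {
        "name": "backend-fw",
        "tags": ["backend"],
        "inbound_rules": [inbound(ALL_TCP, tags=[CLUSTER_TAG])],
        "outbound_rules": [outbound(ALL_TCP, tags=[CLUSTER_TAG])],
    }


if __name__ == "__main__":
    # Each body would be sent as JSON to POST https://api.digitalocean.com/v2/firewalls
    for body in (frontend_firewall(), backend_firewall()):
        print(json.dumps(body, indent=2))
```

Because the firewall targets tags rather than droplet IDs, a newly created droplet picks up its rules as soon as it is tagged.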

Command and Control

In the top left of the diagram above is a single server labeled Command and Control. This is the server I use to run the Ansible commands when needed. I can SSH into it, but Ansible Semaphore gives me a nice web UI and a scheduler (more on this in a future post). It can reach all of the servers in the cluster over the private LAN.

Ansible Inventory

The inventory is generated each time a playbook runs, using a dynamic inventory. Because the inventory is dynamic, I can destroy and create servers at will, and Ansible always knows which servers are in the cluster.
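
The post doesn’t say which dynamic inventory source is used (the `community.digitalocean` collection ships an inventory plugin, for example). As a rough illustration of the idea, this Python sketch turns a DigitalOcean droplet list into Ansible’s JSON inventory format, with one group per tag and each host reached over its private IP. The sample droplets and tag names are made up; a real script would fetch them from `GET /v2/droplets?tag_name=web_cluster`.

```python
import json
import sys
from typing import Optional

# Made-up sample data in the shape returned by GET /v2/droplets; a real
# inventory script would fetch this from the API instead.
SAMPLE_DROPLETS = [
    {"name": "web-01", "tags": ["web", "backend", "web_cluster"],
     "networks": {"v4": [{"ip_address": "10.0.0.11", "type": "private"},
                         {"ip_address": "203.0.113.11", "type": "public"}]}},
    {"name": "web-02", "tags": ["web", "backend", "web_cluster"],
     "networks": {"v4": [{"ip_address": "10.0.0.12", "type": "private"}]}},
    {"name": "lb-01", "tags": ["loadbalancer", "frontend", "web_cluster"],
     "networks": {"v4": [{"ip_address": "10.0.0.2", "type": "private"}]}},
    {"name": "other-app", "tags": [],
     "networks": {"v4": [{"ip_address": "10.0.9.9", "type": "private"}]}},
]


def private_ip(droplet: dict) -> Optional[str]:
    """Return the droplet's private IPv4 address, if it has one."""
    for net in droplet.get("networks", {}).get("v4", []):
        if net.get("type") == "private":
            return net["ip_address"]
    return None


def build_inventory(droplets: list) -> dict:
    """Group cluster droplets by tag, in Ansible's JSON inventory format."""
    inventory = {"_meta": {"hostvars": {}}}
    for droplet in droplets:
        tags = droplet.get("tags", [])
        if "web_cluster" not in tags:
            continue  # not part of this cluster
        name = droplet["name"]
        # Connect over the private LAN, as the Command & Control server does.
        inventory["_meta"]["hostvars"][name] = {"ansible_host": private_ip(droplet)}
        for tag in tags:
            inventory.setdefault(tag, {"hosts": []})["hosts"].append(name)
    return inventory


if __name__ == "__main__":
    # Ansible calls inventory scripts with --list; hostvars are supplied in
    # _meta, so --host lookups can return an empty object.
    if "--list" in sys.argv:
        print(json.dumps(build_inventory(SAMPLE_DROPLETS), indent=2))
    else:
        print(json.dumps({}))
```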

Monitoring

In addition to running all of the Ansible playbooks, this server also runs Zabbix. Zabbix monitors all of the servers in the cluster, and its alerts are one of the ways the cluster can grow, shrink, or heal. When new servers are created, they check in with Zabbix and are assigned to monitoring groups based on their server name.
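
Zabbix typically handles this with auto-registration actions whose conditions match on the host name. The matching logic amounts to something like the Python sketch below; the `<role>-<number>` naming scheme and the group names are assumptions, not the cluster’s actual conventions.

```python
import re
from typing import Optional

# Hypothetical naming convention <role>-<number> (e.g. "web-03") and group names.
ROLE_GROUPS = {
    "lb": "Load Balancers",
    "web": "Web Servers",
    "wpadmin": "WP Admin Servers",
    "db": "Database Servers",
    "nfs": "File Servers",
}

NAME_PATTERN = re.compile(r"^([a-z]+)-\d+$")


def zabbix_group_for(hostname: str) -> Optional[str]:
    """Pick a Zabbix host group from a droplet name, or None if unrecognised."""
    match = NAME_PATTERN.match(hostname)
    if match is None:
        return None
    return ROLE_GROUPS.get(match.group(1))
```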

Load Balancer

Web traffic from the internet arrives at a Digital Ocean floating IP (DigitalOcean now calls these reserved IPs). This is an IPv4 address that can be pointed at any droplet. To switch from Load Balancer 1 to the hot spare, I just move the floating IP via the control panel or API.
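
Failing over is a single API call. This sketch builds (without sending) that request using Python’s standard library, assuming DigitalOcean’s reserved IP actions endpoint; floating IPs were renamed reserved IPs, and the older `/v2/floating_ips/{ip}/actions` path takes the same body. The IP address, droplet ID, and token are placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.digitalocean.com/v2"


def build_assign_request(reserved_ip: str, droplet_id: int, token: str) -> urllib.request.Request:
    """Build (but do not send) the API call that points a reserved IP at a droplet."""
    body = json.dumps({"type": "assign", "droplet_id": droplet_id}).encode()
    return urllib.request.Request(
        f"{API_BASE}/reserved_ips/{reserved_ip}/actions",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values: a documentation-range IP and a made-up droplet ID.
    req = build_assign_request("203.0.113.10", 123456789, "YOUR_API_TOKEN")
    # urllib.request.urlopen(req) would actually perform the failover.
    print(req.get_method(), req.full_url)
```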

Once traffic reaches the load balancer, HAProxy routes it to one of 2 backends. Requests for WordPress admin pages are sent to the WP Admin servers; everything else goes to the regular web servers. More info on this splitting of traffic here.
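
In HAProxy this kind of split is typically an ACL such as `acl is_wp_admin path_beg /wp-admin /wp-login.php` followed by `use_backend wp_admin_servers if is_wp_admin`. The post doesn’t show its actual rules, so the Python below is only a model of that routing decision; the path prefixes and backend names are assumptions.

```python
# Hypothetical backend names; the post does not publish its HAProxy config.
WP_ADMIN_BACKEND = "wp_admin_servers"
WEB_BACKEND = "web_servers"

# Path prefixes treated as "WordPress admin" traffic in this sketch.
ADMIN_PREFIXES = ("/wp-admin", "/wp-login.php")


def choose_backend(path: str) -> str:
    """Mirror an HAProxy `path_beg` ACL: admin paths go to the WP Admin pool."""
    if path.startswith(ADMIN_PREFIXES):
        return WP_ADMIN_BACKEND
    return WEB_BACKEND  # the default_backend
```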

Thanks to Cloudflare origin server SSL certificates, all SSL is terminated at the load balancer. This frees as many resources as possible on the web servers, leaving more CPU time for actually serving web pages.

Web Servers

The web servers all run Nginx and PHP-FPM to serve web pages. The config files and website files for every hosted site are stored on the File Server. Keeping all of the website configs in a shared location speeds up adding a new web server, because configs do not need to be copied before Nginx starts.

Caching

All WordPress sites on the cluster use Nginx’s FastCGI cache to speed up page loads. The PHP code for a page is run once, and the result is saved as a flat HTML file and served from cache for 1 hour before a new copy is generated. Doing this has many benefits:

- The static pages are saved to each web server’s local NVMe drive and served from there, making page loads very fast.

- File Server load is much lower as it is accessed considerably less often.

- Google Search rankings improved for every site thanks to the much faster page loads.

- If the cluster is under heavy load and additional web nodes are added, the new nodes get the file server largely to themselves, since the existing web servers are already serving from their local caches.
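
To see where those cached pages live on disk, here is a small Python sketch of how Nginx lays out its cache: each file is named after the MD5 hash of the cache key, and with `levels=1:2` the subdirectories are taken from the end of that hash. The cache root and the `$scheme$request_method$host$request_uri` key are assumptions; the post doesn’t give its `fastcgi_cache_path` or `fastcgi_cache_key` settings.

```python
import hashlib
from pathlib import PurePosixPath

# Assumed values; adjust to match the real fastcgi_cache_path / fastcgi_cache_key.
CACHE_ROOT = "/var/run/nginx-cache"
LEVELS = (1, 2)  # equivalent of levels=1:2


def cache_key(scheme: str, method: str, host: str, request_uri: str) -> str:
    """Same string nginx builds from "$scheme$request_method$host$request_uri"."""
    return f"{scheme}{method}{host}{request_uri}"


def cache_file_path(key: str, root: str = CACHE_ROOT, levels: tuple = LEVELS) -> PurePosixPath:
    """Where nginx stores the cached response for `key`.

    The file is named after the MD5 of the key, and each directory level
    takes the next characters working backwards from the end of the hash.
    """
    digest = hashlib.md5(key.encode()).hexdigest()
    parts = []
    end = len(digest)
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return PurePosixPath(root, *parts, digest)
```

With this layout a hash ending in `...029c` lands in `c/29/`, which spreads entries across many small directories instead of one huge one.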

Clearing Web Server Cache

Clearing a website’s FastCGI cache is handled by a simple playbook.
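
A minimal Python sketch of the per-server work such a playbook could perform, assuming all sites share one cache directory (a hypothetical path) and the cache key `$scheme$request_method$host$request_uri`. It relies on Nginx writing each entry’s key into the file header as a `KEY: ...` line, and deletes only the entries belonging to one host. A playbook could run a script like this on every web server, for example with `ansible.builtin.script`.

```python
from pathlib import Path

# Hypothetical location; must match fastcgi_cache_path on the web servers.
CACHE_ROOT = Path("/var/run/nginx-cache")


def key_matches_host(key: str, host: str) -> bool:
    """True if a "$scheme$request_method$host$request_uri" key belongs to `host`."""
    for scheme in ("http", "https"):
        for method in ("GET", "HEAD"):
            # $request_uri always starts with "/", so purging "example.com"
            # never matches "www.example.com" or "notexample.com".
            if key.startswith(f"{scheme}{method}{host}/"):
                return True
    return False


def read_cache_key(path: Path) -> str:
    """Return the cache key nginx stored in the file header, or "" if none."""
    with path.open("rb") as fh:
        header = fh.read(4096)  # the KEY line sits near the start of the file
    for line in header.split(b"\n"):
        if line.startswith(b"KEY: "):
            return line[len(b"KEY: "):].decode("utf-8", "replace")
    return ""


def purge_site(host: str, cache_root: Path = CACHE_ROOT) -> int:
    """Delete every cached page for `host`; return how many files were removed."""
    removed = 0
    for path in list(cache_root.rglob("*")):  # materialise before deleting
        if path.is_file() and key_matches_host(read_cache_key(path), host):
            path.unlink()
            removed += 1
    return removed
```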

Web Server Performance

With all of these optimizations, each web server can handle a maximum of about 700 requests per second. We use 500 requests per second per server as the threshold for deciding when to add more web servers.
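
The scaling rule is simple arithmetic: divide the total request rate by the 500 requests-per-second threshold and round up. At that threshold each server still has roughly 200 req/s (about 29%) of headroom below its ~700 req/s ceiling. The minimum of 2 servers in this sketch is an assumption for redundancy, not a figure from the post.

```python
import math

SCALE_THRESHOLD_RPS = 500  # per-server planning limit from the post
MAX_RPS = 700              # approximate per-server ceiling from the post


def web_servers_needed(total_rps: float, min_servers: int = 2) -> int:
    """Servers needed so none exceeds the 500 req/s planning threshold.

    min_servers=2 is a hypothetical redundancy floor.
    """
    return max(min_servers, math.ceil(total_rps / SCALE_THRESHOLD_RPS))
```

For example, `web_servers_needed(1200)` returns 3.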

WP Admin Servers

Why split WordPress admin tasks onto their own servers? There are a couple of reasons. By default, WordPress checks on every page load whether it needs to run background tasks (WP-Cron). This slows down the site and is not optimal. You can disable this feature and trigger the scheduled tasks yourself, and the first WP Admin server is always in charge of that. A list of WordPress websites is kept on the file server, and this server uses it to trigger the scheduled tasks for every site.
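
The built-in trigger is disabled in each site’s `wp-config.php` with `define( 'DISABLE_WP_CRON', true );`. The Python sketch below shows one way the first WP Admin server could then run due events for every site with WP-CLI’s `wp cron event run --due-now`; the site list’s location and format (one install path per line) are assumptions.

```python
import subprocess
from pathlib import Path
from typing import List

# Hypothetical location of the shared site list (one WordPress root per line).
SITE_LIST = Path("/mnt/shared/wordpress-sites.txt")


def read_sites(site_list: Path = SITE_LIST) -> List[str]:
    """Return WordPress install paths, skipping blank lines and # comments."""
    sites = []
    for line in site_list.read_text().splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            sites.append(line)
    return sites


def cron_command(site_path: str) -> List[str]:
    """WP-CLI command that runs every scheduled event that is currently due."""
    return ["wp", "cron", "event", "run", "--due-now", f"--path={site_path}"]


def run_due_events(site_list: Path = SITE_LIST) -> None:
    # Run as the site owner: WP-CLI refuses to run as root without --allow-root.
    for site in read_sites(site_list):
        # check=False so one broken site does not stop the others.
        subprocess.run(cron_command(site), check=False)
```

A system cron entry on that server would call `run_due_events()` every few minutes; the exact interval is up to you.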

WordPress Site Updates

Another great reason is update management. Since all wp-admin URLs are routed to these servers, all updates happen here, which frees up the web servers to serve more pages to visitors. We use WP-CLI to automatically update every WordPress site’s core files, themes, and plugins every night. WP-CLI was also much faster than any other update management system we tested.
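
The post doesn’t list its exact update commands. A plausible nightly sequence per site, sketched in Python around standard WP-CLI commands, follows; running `wp core update-db` after a core update is an added assumption so that database schema changes are applied.

```python
import subprocess
from typing import List


def update_commands(site_path: str) -> List[List[str]]:
    """WP-CLI commands for one site's nightly update, in the order they run."""
    path = f"--path={site_path}"
    return [
        ["wp", "core", "update", path],             # WordPress core files
        ["wp", "core", "update-db", path],          # assumed: apply schema changes
        ["wp", "plugin", "update", "--all", path],
        ["wp", "theme", "update", "--all", path],
    ]


def update_site(site_path: str) -> bool:
    """Run the updates for one site; return False as soon as a step fails."""
    for cmd in update_commands(site_path):
        if subprocess.run(cmd, check=False).returncode != 0:
            return False
    return True
```

Stopping at the first failure keeps plugin and theme updates from running against a half-updated core.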

Finally, we can scale these servers horizontally when we need to run big admin tasks, reducing the time needed to complete them.

Database Servers

Both the web servers and WP Admin servers connect to the same set of database servers: 2 servers running MariaDB, currently 1 read/write server and 1 read-only server. 3 nodes would be optimal for high availability, but the current load on the cluster is not high enough to justify it. Only one of the 2 servers accepts writes, to prevent possible split-brain issues if the nodes disagree on data.
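
With one read/write server and one read-only server, every query has to be routed by type. A minimal Python model of that rule follows: writes always go to the single primary, which is what rules out split-brain, while plain reads go to the replica. Sending reads to the primary after a write in the same request, to avoid replication lag, is a common precaution included here as an assumption; the IP addresses are placeholders.

```python
# Hypothetical private addresses for the two MariaDB servers.
PRIMARY = "10.0.1.10"  # read/write
REPLICA = "10.0.1.11"  # read-only

READ_ONLY_VERBS = ("SELECT", "SHOW", "DESCRIBE", "EXPLAIN")


def route_query(sql: str, wrote_this_request: bool = False) -> str:
    """Pick a database server: all writes go to the single primary."""
    verb = sql.lstrip().split(None, 1)[0].upper() if sql.strip() else ""
    if verb in READ_ONLY_VERBS and not wrote_this_request:
        return REPLICA
    # Writes, and reads right after a write (the replica may lag), use the primary.
    return PRIMARY
```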

Nightly backups are made of the main database server for faster recovery from a possible disaster.

Setting Up WordPress for Multiple DB Servers

WordPress sites are made aware of the 2 database servers by the HyperDB database plugin. Ansible also makes it very easy to regenerate its configuration files when database servers are added or deleted.
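
In Ansible this would naturally be a Jinja2 `template` task looping over the database group. The Python sketch below renders the same kind of HyperDB `db-config.php`, one `$wpdb->add_database()` call per server, with `write` enabled only on the primary. The input format and role names are assumptions; the real template is not shown in the post.

```python
from typing import Dict, List


def hyperdb_config(servers: List[Dict[str, str]]) -> str:
    """Render a HyperDB db-config.php from the current list of database servers.

    Each server dict has "host" (private IP) and "role" ("primary" or "replica");
    both keys are hypothetical.
    """
    lines = ["<?php", "// Generated file: regenerate when database servers change."]
    for server in servers:
        write = 1 if server["role"] == "primary" else 0
        lines += [
            "$wpdb->add_database(array(",
            f"    'host'     => '{server['host']}',",
            "    'user'     => DB_USER,",
            "    'password' => DB_PASSWORD,",
            "    'name'     => DB_NAME,",
            f"    'write'    => {write},",
            "    'read'     => 1,",
            "));",
        ]
    return "\n".join(lines) + "\n"
```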

File Servers

Shared file storage is provided by a single NFS server, which holds website data, Nginx configs, and Nginx snippets. This is the weakest point in terms of high availability, but the alternatives had major issues: GlusterFS and Ceph were both trialed, and neither performed well with the many small files that WordPress sites consist of.

Backups of the shared data are created nightly and uploaded to storage outside of Digital Ocean for additional disaster recovery.
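
A minimal Python sketch of the nightly archive step, using only the standard library; the paths and naming scheme are hypothetical, and the off-site upload is left out because the post doesn’t say where the backups go.

```python
import tarfile
from datetime import date
from pathlib import Path
from typing import Optional


def nightly_archive(source: Path, dest_dir: Path, today: Optional[date] = None) -> Path:
    """Write source/ to dest_dir/<name>-YYYY-MM-DD.tar.gz and return the archive path."""
    today = today or date.today()
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive = dest_dir / f"{source.name}-{today.isoformat()}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps paths relative, so restores don't depend on the NFS mount point
        tar.add(source, arcname=source.name)
    return archive
```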

Final Notes

This cluster has been running flawlessly for 3 years now, and uptime is extremely good: there was a single 10-minute outage in the entire previous year! I will be sharing more about this cluster soon, along with more Ansible playbooks.
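
For perspective, one 10-minute outage in a 365-day year works out to roughly 99.998% availability:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def availability_pct(downtime_minutes: float, period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Percentage of the period during which the service was up."""
    return 100 * (1 - downtime_minutes / period_minutes)


print(f"{availability_pct(10):.4f}%")  # prints 99.9981%
```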